The Dawn of the IT Industry – Why Look Back at the 1970s?

The 1970s was not just another decade in the history of the IT industry—it was a revolutionary era that forever changed the future of humanity by laying the foundations of the digital world as we know it. Like the Renaissance or the Industrial Revolution, this era brought about technological and social transformations whose impact is still felt in every smartphone, laptop, and online interaction. The uniqueness of the decade lies in the simultaneous birth of microprocessor technology, the modern foundations of operating systems, the concept of the personal computer, and the network communication that would evolve into the Internet—four pillars that support the entire infrastructure of today's information society.

Looking back at the 1970s is especially instructive because it was the last period when a single person could still grasp the entire field of computing, and when today's tech giants were still operating as garage startups. This era proves that the greatest revolutionary changes often arise during the toughest economic times—the innovations that today create trillions in economic value emerged amid oil crises and social tensions. The story of the seventies also shows how a narrow technological field can become a global phenomenon, permeating every industry and area of life, and becoming the driving force behind the next fifty years of economic and social development.

The Social and Economic Environment of the 1970s That Shaped the IT Industry

The economic crises and social changes of the 1970s paradoxically provided fertile ground for the IT revolution. Amid the economic difficulties triggered by oil crises, companies were forced to seek new ways to cut costs and increase efficiency. During this period, business leaders realized that data was not just an administrative tool but a resource of real business value—a mindset that directly foreshadowed today's big data era.

During the decade, the corporate perception of data changed fundamentally: where it had previously served only to automate routine tasks, by the 1970s it was treated as "raw material" from which valuable information could be extracted for management decision-making. Database management systems (DBMS) such as IBM IMS, first shipped in the late 1960s, and IDMS, marketed by Cullinane from 1973, provided the technological foundation for this new approach, while professional journals like Datamation enthusiastically promoted the "database approach" to management. This cultural and technological transformation shaped information technology in the following decades and the modern understanding of the strategic value of data.

Early Developments in Computing: Laying the Foundations

The IT revolution of the 1970s had deeper roots than the breakthroughs of the era might suggest—it was actually built on computing foundations shaped over millennia. The first mechanical calculator, the abacus, appeared about 5,000 years ago, when humanity realized that increasing social complexity and trade required counting larger sets of items. With the emergence of specialization and division of labor, more efficient counting methods became necessary, leading to the development of number systems—most commonly the decimal system.

In the 19th century, Charles Babbage (1791-1871) formulated fundamental principles for programmable calculators that anticipated the architecture of the microcomputers of the 1970s. Babbage's requirements (punched-card program control, separate input and output units, memory, and an arithmetic unit) were largely realized in the Intel 8080-based systems of the 1970s. George Boole's logical algebra (1847-54) laid the groundwork for digital circuit design, while Konrad Zuse's Z3 machine (1938-1941) already included a processor, control unit, memory, and input/output units, practically all the essential components of modern computers. This historical continuity helps explain why the innovations of the 1970s were so quickly and widely adopted.

The Invention of the Microprocessor – The First Step Toward Personal Computers

The birth of the microprocessor took place between 1969 and 1971, when several companies worked in parallel to integrate the central processing unit of a computer into a single chip. Garrett AiResearch began developing the Central Air Data Computer (CADC) for fighter jet systems as early as 1968, resulting in the MP944 chipset in June 1970—a 20-bit, 28-chip system that technically preceded the later famous commercial microprocessors.

The real breakthrough, however, came with the Intel 4004, developed under the leadership of Federico Faggin for the Japanese calculator company Busicom. From the project's original seven-chip concept, Faggin and his team created a four-chip solution, including the 4-bit 4004 microprocessor, which contained 2,300 transistors and supported 46 instructions. The first shipments began in March 1971, but the first public announcement of the processor appeared only on November 15, 1971, in Electronic News. Around the same time, on September 17, 1971, Texas Instruments announced its TMS1802NC calculator chip, a precursor of the TMS 1000 series that became the first commercially available single-chip microcontroller in 1974. This technological race and the subsequent microprocessor developments enabled the creation of fourth-generation personal computers from the mid-1970s, which became accessible to almost anyone due to their low price.

Intel 4004 and 8008 – How They Changed the World

The world-changing impact of the 4004 lay primarily in demonstrating the concept—it proved that it was possible to build complex integrated circuits on a single chip and to realize the capacity of the multi-ton ENIAC on a tiny chip. Although the 2,300-transistor processor, running at 740 kilohertz, was never a commercial success and Intel considered it a side project alongside DRAM production, it paved the way for the microprocessor revolution. Despite the 4004's market failure, development continued, resulting in the 8-bit 8008 in April 1972.

The 8008, however, wrote a completely different story. It was originally developed for Datapoint, but when that company could not pay for it due to financial problems, Intel retained all intellectual property, including the instruction set. This fortunate turn laid the foundation for the x86 instruction set architecture, which still dominates computing today. The 8008, priced at $120, offered an alternative for computer manufacturers, universities, and garage companies, laying the groundwork for the microcomputer boom. The chip, manufactured with 10-micron technology, led to the development of the 8080 family, which had triple the clock speed and a 16-bit address space.
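To make the jump to a 16-bit address space concrete, the short Python sketch below computes how many bytes an address of a given width can reach. The 16-bit figure for the 8080 comes from the text above; the 14-bit figure for the 8008 is taken from the chip's published specification rather than from this article, and the helper name addressable_bytes is purely illustrative.

```python
def addressable_bytes(address_bits: int) -> int:
    """Return how many distinct byte addresses an address of this width can name."""
    return 2 ** address_bits


# 8008: 14-bit addresses (assumption from the datasheet, not from the article).
# 8080: 16-bit addresses (as stated in the text).
for chip, bits in [("8008", 14), ("8080", 16)]:
    size = addressable_bytes(bits)
    print(f"{chip}: {bits}-bit address -> {size} bytes ({size // 1024} KB)")
```

Two extra address bits quadruple the reachable memory, from 16 KB to 64 KB, which is one reason the 8080 was a far more practical basis for a general-purpose microcomputer.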

The Reign of Mainframes and Their Role in Corporations

By the mid-1970s, corporate computing was organized almost exclusively around mainframe computers, which occupied huge, air-conditioned rooms and were the undisputed kings of corporate data processing. These systems operated largely in batch mode, reading data from punched cards and magnetic tapes, and were primarily used to automate back-office functions such as payroll, invoicing, and inventory management. Interactive terminals such as the IBM 2741 and IBM 2260, introduced in the mid-1960s alongside the System/360, came into wide use in the early 1970s, allowing hundreds of users to work on a system simultaneously.

The true power of mainframes lay in their ability to handle the most critical corporate applications with exceptional reliability and security. While microprocessors revolutionized smaller systems by the late 1970s, large corporations continued to rely on mainframes for areas such as banking, insurance, and healthcare. NASA, for example, used these machines intensively in the space program to solve complex computational problems, as depicted in the film Hidden Figures. This era laid the foundation for a dual development path, where mainframes remained the backbone of corporate infrastructure, while microprocessor-based systems gradually conquered the market for smaller organizations and individual users.

IBM's Dominance of Business Computing in the 1970s

In the 1970s, IBM cemented its dominant position in business computing with the introduction of the System/370 series in 1970, following the revolutionary System/360. The company achieved an 85% market share in the US mainframe market during this decade, a dominance it maintained for the next three decades. This overwhelming position was no accident—IBM had already built unparalleled organizational capabilities in punched card data processing before the war, which perfectly matched the requirements of computer technology.

The real innovation of the System/360 and System/370 families was that every model could run the same software, allowing companies to upgrade seamlessly without rewriting their applications. IBM's strategic advantage was further strengthened by complementary technologies such as the CICS transaction processing monitor and the OS/VS1 and later MVS operating systems, which together secured an almost monopolistic market share for the company. By the late 1970s, the IBM mainframe was not just a computer but synonymous with business computing worldwide, and the nickname "Big Blue" became part of the company's identity.

The Revolution of Operating Systems – The Birth and Significance of Unix

The decade's most influential operating system has its roots in the summer of 1969, when Ken Thompson began writing Unix on an unused PDP-7 computer at Bell Laboratories. Thompson, who had previously participated in the overly complex Multics project, deliberately chose a simpler approach: he wrote the kernel in a week, the shell in another week, and the text editor and assembler in the weeks that followed. The original name "UNICS" (Uniplexed Information and Computing Service) was Brian Kernighan's sarcastic idea, a pun on "eunuchs" that cast the system as a "castrated" version of Multics.

The real revolution, however, came in 1973, when Dennis Ritchie and Thompson rewrote the system in C. This rewrite changed the world of operating systems: until then, operating systems were almost always written in assembly language, which tied them to specific hardware, but C was high-level enough to mask hardware differences while remaining low-level enough to allow highly efficient code. Because a 1956 antitrust consent decree barred AT&T from entering the computer business, the company could not sell Unix as a commercial product, so it was distributed to universities with source code at essentially no cost. This decision, combined with the fact that the PDP-11 was the dominant university machine while its own operating system was poorly regarded, triggered an avalanche effect, leading to endless "hacking" at hundreds of universities.

ARPANET, the Predecessor of the Internet – How the Connected World Began

In the late 1960s, when microprocessors and personal computers were still just concepts, the US Department of Defense was experimenting with a vision that became the foundation of today's global Internet. ARPANET (Advanced Research Projects Agency Network) was born on September 2, 1969, when Bolt, Beranek and Newman installed the first Interface Message Processor (IMP), an early router, at UCLA. The first ARPANET message was sent on October 29, 1969, between UCLA and the Stanford Research Institute, a date often cited as the birth of the Internet.

The network was based on the packet-switching concept pioneered by Paul Baran, in which the data stream is divided into smaller packets, each carrying its own routing information, so that the message can be reassembled at the destination and lost or damaged packets can be sent again. This approach matched American military strategic thinking of the time, which sought a network that would remain operational even after a nuclear attack. The initial four endpoints (UCLA, the Stanford Research Institute, UC Santa Barbara, and the University of Utah) soon expanded; in 1972 the first email application appeared, and in 1974 the term "internet" was first used in a specification of the TCP protocol. After the transition to the TCP/IP protocol in 1983, ARPANET was formally decommissioned in 1990, giving way to more advanced backbone networks and laying the foundation for today's global Internet infrastructure.
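The packet idea described above can be sketched in a few lines of Python. This is a toy illustration only, not the ARPANET's actual packet format: the Packet fields, the fragment size of 8 characters, and the function names packetize and reassemble are all assumptions made for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Packet:
    dest: str     # routing information carried by every packet
    seq: int      # position of this fragment in the original message
    total: int    # number of fragments in the whole message
    payload: str  # the fragment itself


def packetize(message: str, dest: str, size: int = 8) -> list[Packet]:
    """Split a message into fixed-size fragments, each labelled for routing."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(dest, seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> str:
    """Restore the original message, whatever order the packets arrived in."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or len(ordered) != ordered[0].total:
        # A real network would ask the sender to retransmit the missing packets.
        raise ValueError("missing packets")
    return "".join(p.payload for p in ordered)


message = "LOGIN request from UCLA to SRI"
packets = packetize(message, dest="SRI")
random.shuffle(packets)  # packets may take different routes and arrive out of order
assert reassemble(packets) == message
```

Because every packet carries its own destination and sequence number, no single path through the network has to stay intact for the whole message, which is the property that made the design attractive for resilient communication.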

The Emergence of the Personal Computer (PC) – The Story of Apple and Altair

The Altair 8800, introduced in the January 1975 issue of Popular Electronics, was the first computer to truly launch the personal computing revolution. Marketed by MITS (Micro Instrumentation and Telemetry Systems) in Albuquerque as a kit for about $400, the primitive device had only a switch panel for input and LEDs for output, but its affordability far outweighed this limitation: for the first time, ordinary enthusiasts could buy a personal computer.

The impact of the Altair went far beyond its direct commercial success, inspiring the most talented young programmers and engineers of the era. Among the members of the Homebrew Computer Club in California were Steve Wozniak and Steve Jobs, who, inspired by the Altair, developed the Apple I, which already had a keyboard and TV connection, making computing much more user-friendly. The Apple I was fundamentally different from the Altair: it ran on a 1 MHz MOS Technology 6502 processor instead of an Intel chip, and ultimately became the springboard for the development of the Apple II in 1977, which opened a new era of home computing. Meanwhile, the Altair also catalyzed Bill Gates and Paul Allen's development of a BASIC interpreter, which became Microsoft's first commercial product.

Steve Jobs and Steve Wozniak: Founding Apple and Early Innovations

The dynamic partnership between the two Steves began in mid-1971, when Bill Fernandez introduced them. Their business collaboration began that fall: Jobs sold Wozniak's "blue boxes," which allowed free long-distance phone calls, for $150 each. They also used the device for pranks, most famously a call to the Vatican in which Wozniak posed as Henry Kissinger.

The choice of company name reflected Jobs's practical and marketing mindset: the name Apple Computer was not only friendly and non-technical, but the "A" would also precede Atari in the phone book. According to Jobs, "I was on one of my fruitarian diets and had just come back from an apple orchard" when the idea came to him. They raised the initial capital by Jobs selling his Volkswagen microbus and Wozniak selling his programmable Hewlett-Packard calculator, giving them $1,350. Jobs used the Byte Shop's first order of 50 units to secure a bank loan, which they used to build the machines in his parents' garage.

The Birth of Microsoft – Bill Gates and Paul Allen's Path to Becoming a Software Giant

The friendship formed at Lakeside School in Seattle foreshadowed the later software revolution: Gates and Allen realized as high school students that the possibilities in the world of computers went far beyond technological tools. Both learned BASIC programming via teletype terminal and already showed a shared interest in business—in 1972, they bought an Intel 8008 chip for $360 to build a computer for traffic measurement and founded their first company, Traf-O-Data.

The breakthrough came in 1975, when they developed a BASIC interpreter for the newly released Altair 8800 microcomputer, which MITS bought from them. The success was so significant that Gates dropped out of Harvard, Allen quit Honeywell, and they moved together to Albuquerque, where MITS was headquartered. The company, registered as Micro-Soft (later simplified to Microsoft), was named to reflect their mission: to meet the software needs of the microcomputer age. Gates was 19 and Allen 22 when they launched the company, which is now worth nearly $3 trillion and is the world's second most valuable publicly traded company.

The Development of Programming Languages in the 1970s – C and Other Cornerstones

The 1970s brought explosive development in programming languages, with many of the basic principles we still use today being established. Early in the decade, Niklaus Wirth developed the Pascal language, which was attractive to the founders of Apple for its simplicity. In 1972, however, Dennis Ritchie at Bell Telephone Laboratories developed the C language as a successor to Ken Thompson's B language. C proved so influential that many of today's programming languages, such as C#, Java, JavaScript, PHP, Python, and Perl, borrow from it to varying degrees.

The uniqueness of C lay in its combination of low-level hardware access and high-level language features, relying heavily on pointers and structured programming principles. In the 1980s, no entirely new principles emerged; instead, existing ones were combined: C++ (1983), created by Bjarne Stroustrup, added object-oriented features to C, while in the US the Ada language was developed for military purposes. The latter was named in honor of Ada Lovelace, often regarded as the first programmer in history, and became particularly useful in aviation, transportation, and space research, although its complex semantics made it difficult to learn.

The Database Revolution – The Emergence of Relational Databases

The database revolution of the 1970s began with Edgar F. "Ted" Codd's 1970 paper "A Relational Model of Data for Large Shared Data Banks," which fundamentally changed the world of data storage and management. Codd's idea was to organize data in tabular form using mathematical relations, in contrast to the earlier hierarchical and network models, which were inflexible and poorly suited to ad-hoc queries.

The real breakthrough of the relational model was its great flexibility and the simplicity of tabular representation, which was closest to the average user's perspective. Unlike earlier hierarchical systems, where any structural change was costly, relational databases made database design, modification, and maintenance simple. In the new model, two-dimensional tables based on mathematical relations consisted of rows and columns, with columns describing properties. In practice, relational database managers departed from the theoretical model in three important ways: relations became bags (which may contain duplicate rows) instead of sets, query results were allowed to contain duplicate column names, and NULL values were introduced. These changes did not diminish the revolutionary nature of the model. By the late 1970s, the first commercial relational systems appeared, and the SQL query language gradually became a standard, laying the foundation for the dominance that still characterizes database management today.
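Two of these practical departures, bags instead of sets and NULL values, are easy to observe in any SQL system. The sketch below uses Python's built-in sqlite3 module with an in-memory database; the employee table and its rows are invented purely for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employee (name TEXT, dept TEXT, phone TEXT)")
conn.executemany(
    "INSERT INTO employee VALUES (?, ?, ?)",
    [
        ("Ada", "Research", "555-0100"),
        ("Ada", "Research", "555-0100"),  # exact duplicate row: legal in a bag
        ("Grace", "Systems", None),       # None is stored as NULL (value unknown)
    ],
)

# Bag semantics: a plain SELECT keeps duplicates; DISTINCT restores set behavior.
all_rows = conn.execute("SELECT name, dept FROM employee").fetchall()
distinct_rows = conn.execute("SELECT DISTINCT name, dept FROM employee").fetchall()

# NULL semantics: NULL is found with IS NULL; comparing with "=" never matches.
missing_phone = conn.execute(
    "SELECT name FROM employee WHERE phone IS NULL"
).fetchall()
equals_null = conn.execute(
    "SELECT name FROM employee WHERE phone = NULL"
).fetchall()

print(len(all_rows), len(distinct_rows))
print(missing_phone, equals_null)
```

In a strictly set-based relation the duplicate row could not exist at all, and every attribute would have a definite value; SQL systems trade that mathematical purity for convenience.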

The Floppy Disk and the Evolution of Data Storage – How Data Became More Mobile

The floppy disk fundamentally changed the concept of data mobility. IBM began developing it in 1967 and shipped the first 8-inch (20.3 cm) version, with a capacity of about 80 KB, in 1971. This magnetic storage device evolved through three generations: the 5.25-inch version became the standard in the 1980s with capacities between 160 KB and 1.2 MB, while the later 3.5-inch disk ranged from 720 KB to 2.88 MB. The operating principle was simple: a magnetic coating was applied to a flexible plastic disk, and data was written and read in binary form by an electromagnetic head as the disk spun in the drive.

The real revolution of floppy disks was that they made it easy to transport data between computers, unlike the fixed storage devices of earlier eras. The ZIP disk, introduced in the 1990s as the successor to the 3.5-inch floppy, offered about 100 MB of capacity, but its high price and slow speed prevented widespread adoption. By the 2000s, optical storage took over: CDs with 640-700 MB capacity far surpassed floppies, and then DVDs with 4-9 GB and Blu-ray discs with 25-50 GB capacities finally replaced removable magnetic disks. The evolution of data storage was impressive: in 70 years, the cost of storing 1 megabyte fell by a factor of 163 million, and today we can store 500 terabytes of data on a palm-sized glass plate.

The Early Steps of the Video Game Industry – Atari and the Rise of Arcade Games

Computer Space, released in 1971, was the first commercial video game, inspired by the earlier Spacewar! computer game, but the real breakthrough came with Pong in 1972. The formative period of the video game industry lasted from 1971 to 1975, followed by a decline from 1976 to 1978, before the "golden age" began in the wake of Space Invaders, released in 1978. Atari became one of the world's first video game manufacturers, and when Nolan Bushnell sold the company to Warner Communications in 1976, the Atari 2600 (VCS, Video Computer System) was still in development; released in 1977, it allowed different games to be played with interchangeable cartridges.

The terminology of video games also originated in this period: the term "video game" was developed to distinguish electronic games displayed on a video screen from those using teletype printers or speakers. Coin-operated arcade cabinets quickly conquered public spaces, and by around 1980, it became common practice to create home versions of successful arcade games—as was the case with the famous Space Invaders. The VCS's two main competitors were the Mattel Intellivision (1980) and the ColecoVision (1982), laying the groundwork for the console wars that would characterize the industry for decades.

The First Software for Personal Computers – From Games to Office Applications

The true potential of personal computers was only realized when, in addition to raw hardware, the first usable software appeared, making these machines accessible even to users with no programming experience. The early software palette was extremely simple: the machines came with a BASIC interpreter by default, allowing users to write and run simple programs. Tandy Corporation computers, for example, already included a keyboard and CRT monitor in 1977, and users could store their programs and data on cassette tapes.

The real turning point came in 1979 with the appearance of VisiCalc, the first spreadsheet program, which revolutionized the use of personal computers. This software enabled people with no programming experience to solve serious and complex problems with their computers—a development that was crucial to the explosive spread of microcomputers. Software manufacturers quickly recognized market needs and began developing programs for word processing, data management, and drawing. The range of tasks performed on personal computers soon expanded to include document creation, resume writing, and various office tasks, establishing the practice that computers were not just tools for specialists but everyday work tools for average users as well.

The Early IT Startups – Entrepreneurs and Companies in the 1970s

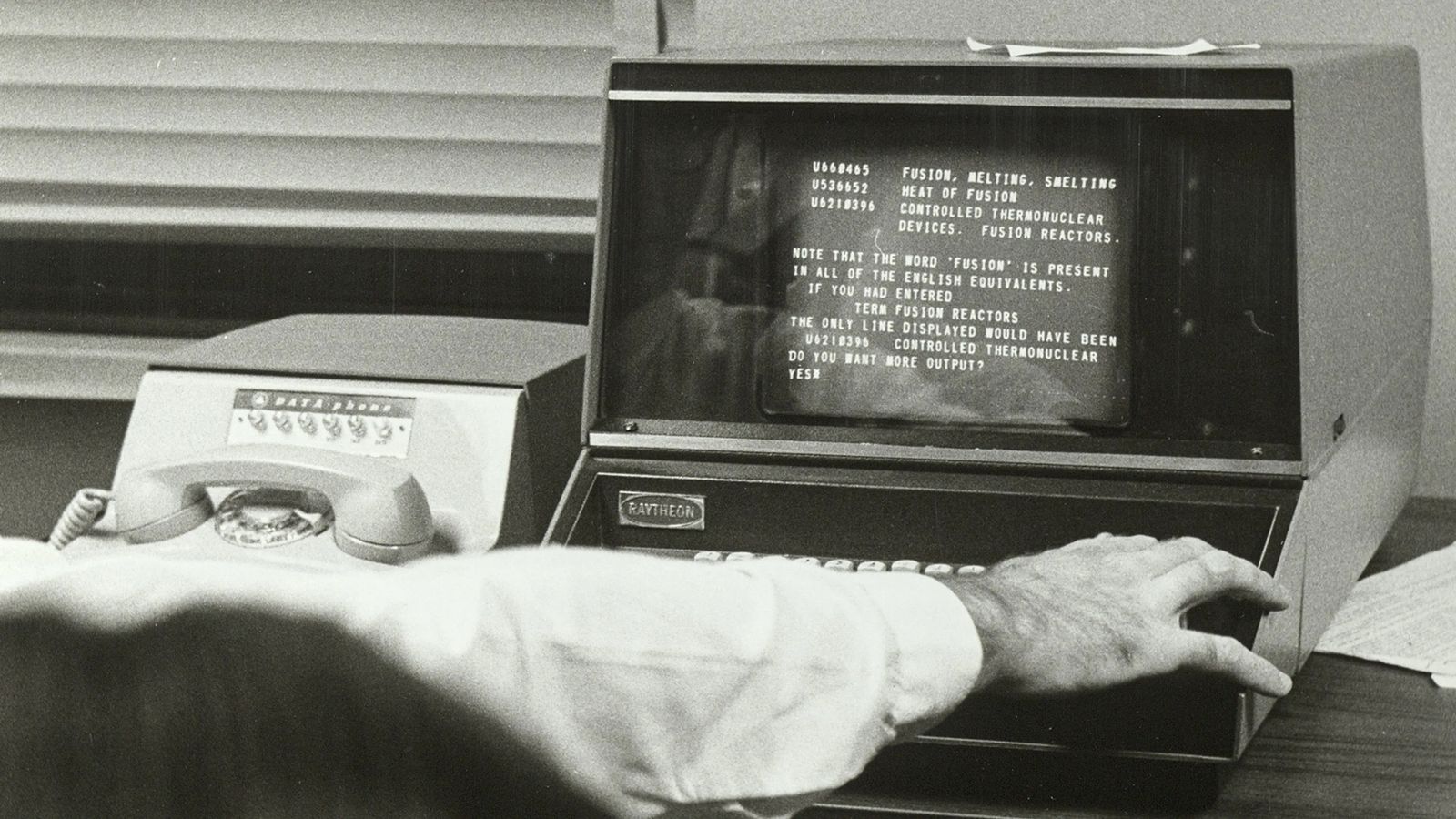

During the 1970s, many entrepreneurs recognized the commercial potential of computers and founded the companies that would later become giants of the IT industry. The widespread adoption of minicomputers in the mid-1970s opened up new opportunities for developers of text and document search software for these platforms. Notable products such as BASIS (Battelle Institute) and INQUIRE (Infodata) date from this period, while in the UK, systems such as ASSASSIN (ICI), STATUS (Atomic Weapons Research Establishment), CAIRS (Leatherhead Food Research Association), and DECO (Unilever) emerged.

A major milestone for search software came in 1970, when IBM launched STAIRS (Storage and Information Retrieval System), marking the beginning of commercial enterprise search software. This product was designed for multi-user time-sharing applications and remained part of IBM's product line until the early 1990s. The entrepreneurial spirit did not stop there: in 1985, Advanced Decision Systems was founded in San Jose, developing artificial intelligence applications, and later spun off Verity, which, under the leadership of David Glazer and Philip Nelson, introduced a probabilistic search engine. Unlike IBM STAIRS, Verity was platform-independent, and a multi-user license cost $39,500 in the late 1990s.

The Role of Education and Research in IT Development

University research institutes and higher education institutions played a key role in the IT revolution of the 1970s, providing the scientific background and trained professionals for the emerging industry. Experimental prototypes developed in the 1970s, notably INGRES at the University of California, Berkeley, demonstrated the practical viability of relational database management systems, while IBM's research division worked on a similar solution, System R, in parallel. These research projects were not only of theoretical significance but also had a direct impact on commercial product development and the formation of industry standards.

The role of higher education institutions went beyond mere research: they trained the programmers, system designers, and entrepreneurs who later founded the era's leading technology companies. Information management as a scientific field began to crystallize during this period, and although many initially saw it as a rebranding of traditional library science, it soon became clear that a completely new discipline was emerging. The theoretical framework and practical research opportunities provided by educational institutions together created the knowledge base on which the software industry that emerged by the late 1970s could rely.

The Emergence of Programming Culture – Communities and Hobbyists

A completely new type of community culture began to develop in the programming world of the 1970s, driven by a passion for technology and the need for collaborative problem-solving. At this time, most programmers did not have their own computers but used expensive mainframe systems at work or university computing centers, where they often had to share computing resources with other users. This limited access paradoxically strengthened the solidarity of the programming community: developers were forced to collaborate, share knowledge, and find creative solutions to technical challenges.

One of the most characteristic features of programming culture in this period was that code searching and documentation activities were already a fundamental part of development work—a 1997 study found that code searching was the most common activity among programmers. In the era of punch cards, programmers paid special attention to code quality and efficiency, as rerunning a faulty program could take hours or even days. Community learning and mentor-apprentice relationships were extremely important, especially when the emergence of the C programming language enabled the transition from assembly language, significantly facilitating the development process.

The Role of Women in the IT Industry of the 1970s – Challenges and Achievements

Ironically, the 1970s was one of the most challenging periods in IT history for women, even though they had previously dominated the programming profession. After World War II, women continued to play a significant role in computing: the Apollo missions of the 1960s relied on software developed under Margaret Hamilton's leadership and on the circuit assembly skills of Navajo women. However, by the late 1970s, as the commercial value and prestige of software development became apparent, governments and companies came to regard computing jobs as strategically important, high-status positions.

This decade brought a tragic turn: women were gradually pushed out of technological positions and replaced by men in more modern, higher-status jobs, even though the work itself remained essentially unchanged. Programming, previously considered "women's work" due to attention to detail and patience, suddenly became a "masculine" expert field when its true complexity and economic potential became clear. The introduction of aptitude tests and personality profiles further worsened the situation, as these favored stereotypically masculine traits, permanently changing the demographic composition of the profession.

The Social Impact of the IT Industry – How It Changed the World of Work

The IT developments of the 1970s went far beyond mere technological innovations, initiating social changes whose impact is still felt in the world of work today. The first wave of automation and computerization already outlined the pattern that would later be repeated in the age of artificial intelligence: while certain jobs disappeared, new types of occupations emerged that required higher levels of education and different skills. Historical experience shows that technological revolutions always create more jobs than they eliminate, although this is accompanied by significant transitional difficulties and social tensions.

By the end of the decade, it was clear that computers would not only be the privilege of large corporations but would gradually transform every sector. Workers had to prepare for the automation of tasks previously performed by hand, while new opportunities opened up in creative and intellectual jobs. This paradigm shift particularly affected office workers, for whom the computer gradually became an essential work tool, changing not only workflows but also attitudes toward work itself. Despite the adaptation challenges, the 1970s proved that workers who mastered new technologies could gain a significant competitive advantage in the labor market.

Security Initiatives at the Dawn of Computing – The First Viruses and Defenses

By the late 1970s, as computers became more widespread, information security also came to the fore, even though the concept of cybersecurity as we know it today was still in its infancy. The first computer viruses appeared in this decade, although they were more experimental than malicious attacks. The "Creeper" virus appeared on the ARPANET in 1971, simply displaying the message: "I'm the creeper, catch me if you can!"—thus beginning the decades-long race between viruses and antivirus programs.

Defense mechanisms developed closely alongside the emergence of threats. The first antivirus program, "Reaper," was created specifically to counter the Creeper virus, demonstrating the principle that every new security challenge requires a technological response. The basics of authorization management and access control in mainframe systems also developed during this period, as security in multi-user environments became critical. Although personal computers were not yet widespread, protecting central computers already required password authentication, user rights management, and ensuring data integrity—concepts that later became the cornerstones of modern information security.

The Emergence of IT Standards – Communication and Compatibility Issues

By the late 1970s, it became clear that the lack of communication and compatibility between computer systems was a serious obstacle to IT development. Different manufacturers developed their products according to their own standards, resulting in a heterogeneous environment where incompatibility hindered the flow of information between different parts of organizations. This problem particularly affected companies using multiple systems in parallel, as developing and maintaining interfaces between incompatible subsystems could account for half of the total IT budget.

The first steps toward standardization appeared in the field of communication protocols. The approach is well illustrated by a later standard, CANopen, which dates from the 1990s: its communication objects define three basic communication models (master-slave, client-server, and producer-consumer), enabling flexible data transfer between different devices. The roots of later information security standards also go back to this period, when it was recognized that standardizing data protection and system security was essential for sustainable technological development. The use of standard solutions thus became crucial for reducing long-term integration costs and for the strategic planning of corporate technology systems.

The First Computer Networks – The Emergence of LAN and WAN

The emergence of the first computer networks in the late 1970s fundamentally changed the paradigm of using IT tools, as previously isolated computers could now communicate and share resources. The development of local area networks (LANs) was directly linked to the spread of personal computers—while previously a central computer was accessed by simple terminals over slow lines, the new concept used high-speed connections to link multiple computers within a single network. The first LANs appeared in the late 1970s, when the advent of Ethernet and ARCNET technologies enabled efficient local communication.

The development of wide area networks (WANs) took place in parallel with LAN technologies but served completely different purposes. Packet switching, which Paul Baran had proposed in the early 1960s, revolutionized data transmission when, in 1969, four American research sites (UCLA, the Stanford Research Institute, UC Santa Barbara, and the University of Utah) were linked by the ARPANET over 50 kbit/s connections. Baran's design had been motivated by the need for military communication to survive a nuclear attack, and the defense-funded ARPANET later became the foundation of the Internet. The distinctive feature of WANs was that computers could reach one another over multiple routes, with the network itself determining the best path for each transmission.
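The idea of a network choosing its own path can be sketched in a few lines of Python. The sketch below uses Dijkstra's shortest-path algorithm as a stand-in; it is not the routing procedure the ARPANET actually ran, and the link layout and link costs in NETWORK are hypothetical values chosen only for illustration.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, int]]

# Hypothetical four-node network; the costs are invented for this example.
NETWORK: Graph = {
    "UCLA": {"Stanford": 4, "UCSB": 1},
    "Stanford": {"UCLA": 4, "UCSB": 2, "Utah": 5},
    "UCSB": {"UCLA": 1, "Stanford": 2},
    "Utah": {"Stanford": 5},
}


def shortest_path(graph: Graph, source: str, target: str) -> Optional[Tuple[int, List[str]]]:
    """Return (total cost, list of nodes) for the cheapest route, or None."""
    queue: List[Tuple[int, str, List[str]]] = [(0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return None


def without_link(graph: Graph, a: str, b: str) -> Graph:
    """Return a copy of the graph with the link between a and b removed."""
    return {
        node: {m: w for m, w in links.items() if {node, m} != {a, b}}
        for node, links in graph.items()
    }


# Normal operation: the cheapest route runs through UCSB.
print(shortest_path(NETWORK, "UCLA", "Utah"))
# After a link failure the traffic is re-routed over the remaining links.
print(shortest_path(without_link(NETWORK, "UCLA", "UCSB"), "UCLA", "Utah"))
```

With these assumed costs, the first call finds the route UCLA, UCSB, Stanford, Utah at cost 8. Once the UCLA to UCSB link is removed, the second call falls back to UCLA, Stanford, Utah at cost 9, which is the property that multiple access routes provide.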

The Economic Impact of the IT Industry by the Late 1970s

The effects of the technological revolution of the late 1970s reached beyond the borders of developed countries, laying the groundwork for the second phase of global financial integration. This period, spanning about 30 years from 1970, came to be characterized by the integration of post-socialist countries into international markets. As political changes opened these markets, the volume of international investment increased, stimulated by the rapid adoption of new IT inventions, especially computers and the Internet.

The global economic implications of information technology development were particularly evident in the structure of corporate investment: while in 1955 companies devoted only 7 percent of their investment to IT, by the 1980s this figure was around 30 to 40 percent. As the technology spread, however, a paradoxical situation arose: the first innovators gradually lost their competitive advantage and were forced to bring their prices closer to real production value, which raised unit labor costs and lowered profit rates. This process was felt worldwide, changing the dynamics of international capital flows and paving the way for later economic crises.

The Impact of Technological Innovations on Later Decades

The technological innovations of the 1970s had far-reaching consequences for the following decades and offer a textbook case of what economists call "creative destruction." According to this phenomenon, described by Schumpeter, radical innovations not only create new industries but also completely reshape the structure of the economy. From the 1990s onward, technological development had an increasingly serious impact on business processes and economic life, transforming professional thinking about competitiveness and bringing the concept of sustainable competitive advantage to the fore.

The contribution of technical progress to economic growth can be measured empirically: in Western European countries, this contribution ranged from 60 to 67 percent between 1994 and 2000, while in the United States it exceeded 70 percent. This trend began with the innovations of the 1970s, when the fundamental technologies appeared that later revolutionized every industry. Technological paradigm shifts are cyclical: each one gives rise to new, dynamically developing industries that change the entire market and society. The ongoing fifth industrial revolution has its roots directly in the computing breakthroughs of the 1970s, when IT security first became a key issue; today, in the era of the Internet of Things, it is even more critical.

Curiosities and Lesser-Known Stories from the IT World of the 1970s

The IT world of the 1970s saw many curiosities and lesser-known developments that already foreshadowed the fundamental features of today's digital age. Edison's phonograph of 1877, for instance, was originally intended only as a dictation machine, yet it established the principle of sound recording on which the early digital audio experiments of the 1970s built. Communication technology revolutions tend to follow a common scenario from idea to mass adoption: the theoretical concept is followed by prototypes and then by a successful, widespread version, which often already contains the seeds of its successor, just as the telegraph anticipated the telephone, the telephone the radio, and the computer the Internet.

One of the most interesting phenomena of the decade was the emergence of the concept of "electronic media packages": each era has its own media package, served by the most advanced technology of its time. In the 1970s, this meant the age of television: the invention of the transistor had miniaturized devices, frequency modulation had brought FM radio broadcasting and stereo sound, and radio was forced to specialize as television gained ground. Another peculiarity is that, whereas every earlier communication device was counted per household, the mobile phone became the first technology associated with a person rather than a home, foreshadowing the era of personal IT devices.

Early Visions of the Future of the IT Industry from the 1970s

The IT developments of the 1970s already contained the technological elements that today form the basis of Industry 4.0 and digital transformation. The spread of numerically controlled (NC) machine tools and the appearance of the first CNC (Computer Numerical Control) systems by the mid-1970s directly anticipated today's cyber-physical systems. The invention of the microprocessor in the early 1970s gave explosive momentum not only to production but also to design, laying the foundation for the culture of computer-based data processing, design, and control that today forms the backbone of smart factories.

By the end of the decade, new fields had developed, such as computer-aided design (CAD), computer-aided manufacturing (CAM), and computer-integrated manufacturing (CIM), which already outlined the concept of modern automated manufacturing processes. It is particularly noteworthy that AutoCAD, first released in 1982, had by 1986 become the world's most widely used design application and remains a leading one today, showing the long-term impact of the technological groundwork laid in the 1970s. The early appearance of flexible manufacturing systems (FMS) and of the planning systems that preceded enterprise resource planning (ERP) already foreshadowed the integrated management approach that is now central to Industry 4.0 digitalization efforts.

Why It Is Important to Know the IT Industry Stories of the 1970s

Learning about the history of IT in the 1970s is key to understanding today's technological development, as this decade created fundamental paradigms and patterns that still define the operation of the IT sector. The lessons of the era are especially valuable for today's entrepreneurs and technology professionals, who face similar challenges: how to turn radically new technologies into commercial successes, how to build sustainable business models, and how to manage the social impacts of technological change. The 1970s proved that every major technological breakthrough is backed by decades of research and development, and successes often come from unexpected directions—just as Edison's phonograph led to digital audio recording, or the ARPANET military network led to the Internet.

Studying the events of the decade also highlights that the social consequences of technological revolutions often go far beyond the original intentions. The Ragusan merchants of the 16th and 17th centuries had already shown how successful business strategies can be built on the gaps between different legal and cultural systems, much as the IT pioneers of the 1970s exploited information asymmetries between traditional industries and regulatory environments. Understanding the cyclical nature of IT history helps prepare for future challenges: today's artificial intelligence revolution brings labor market shifts and social tensions comparable to those of the automation wave of the 1970s, and historical experience suggests that in the long run more jobs have been created than lost.